Pegasus Enhancement Proposal (PEP)

PEP #: 130

Title: Remote CMPI support

Version: 1.2

Created: 11 February 2004

Authors: Adrian Schuur

Status: draft

Version History:

| Version | Date | Author | Change Description |
|---|---|---|---|
| 1.0 | 11 Feb 2004 | Adrian Schuur | Initial Submission |
| 1.1 | 25 Apr 2004 | Adrian Schuur | Added rationale for use of namespaces to define remote locations; Introduced use of CIM_SystemIdentification and CIM_SystemInNamespace to designate remote namespaces; Added rationale for support for generic Namespace definition in PG_ProviderCapabilities; Reworked Namespace Integrity enforcement section; Added details about daemon life cycle control; Added entries to Discussion section |
| 1.2 | 29 Apr 2004 | Adrian Schuur | Added re-licensing clause; Added explanation about remote provider registration; Added configuration switch support |

Abstract: Modifications required in Pegasus to effectively support Remote CMPI.

Short Introduction to Remote CMPI Concepts

Typical environments where Remote CMPI can be deployed are clusters of individual elements to be managed. Usually the elements are constrained in one way or another. They might be all the electronic door locks of a building complex (small footprint and processor), all processors in a high-performance compute cluster (no cycles left for a full-blown CIMOM), or blades in a blade complex (cycle/space constrained, management constrained). In all these cases we are talking about potentially large numbers of like elements to be managed, for which it does not make sense to replicate and maintain a complete Pegasus environment on each element.

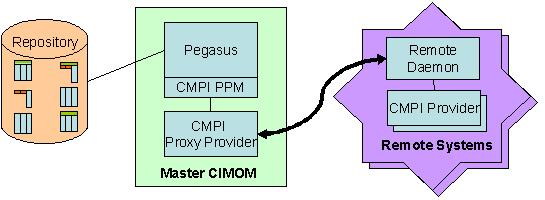

In a Remote CMPI environment one will typically find one master CIMOM driving providers at remote locations. In principle one can have backup CIMOM(s) in case the current master fails, although this would require more function than is proposed in this PEP. The master CIMOM server acts as the central management access point. The access point for instrumentation is a specialized daemon at the remote location. This single daemon drives any of the providers registered for that remote namespace. The runtime environment for a CMPI provider plus daemon is very small.

CMPI encapsulates all interactions with a CIMOM and its artifacts via simple ANSI C structs organized as function tables. Getting a property from an instance, for example, goes via a short stub to the actual CIMOM API that retrieves that property. The implementation of the stub is CIMOM dependent. For Pegasus, short sequences of code invoke regular Pegasus APIs. For Java-based CIMOMs, the stub uses JNI techniques to interface to the appropriate Java class interfaces. A CMPI-style provider is not aware of this. It only sees and uses the function tables to get things done, and the respective CIMOM makes sure the matching stubs are used. Remote CMPI exploits this concept by setting up the function tables so that a communication layer is involved wherever the remoteness has to be bridged. This does not mean that every API invocation results in network traffic. Suffice it to say at this point that, in most cases, network traffic only occurs when a provider is invoked and when it returns to the CIMOM.

The question is how, from a client perspective, one CIMOM can enable transparent access to the resources of the individual elements to be managed. From a client perspective, the CIMOM is addressed by specifying a hostname and port. The actual object to work with is defined by its class name, the namespace the object belongs to, and, if it is an instance, its keys. Remote CMPI contains a function, the Location Resolver, that decides at, or for, which location a request should be executed. Location resolution will be based on the namespace name. Namespace-based location resolution requires a namespace for each remote location. This is not just for resolution reasons. Remote locations might have their own static instances, and the namespace will be used for these as well.

This approach was chosen because it is the least obtrusive way to implement this kind of support, and because it can be built from architectural ingredients that are already available.

CIM_SystemIdentification and CIM_SystemInNamespace instances will be used to designate namespaces as remote namespaces. The first holds the network address of the remote location represented by a particular namespace. The second is an association that ties CIM_Namespace and CIM_SystemIdentification together. SLP entries will be generated for the remote locations participating in this scheme. These entries will contain the master CIMOM hostname and port(s), and an additional entry defines the namespace to be used. This approach has an additional advantage: it hides the actual location address, so changing CIM_SystemIdentification is the only change needed when systems move to other locations.

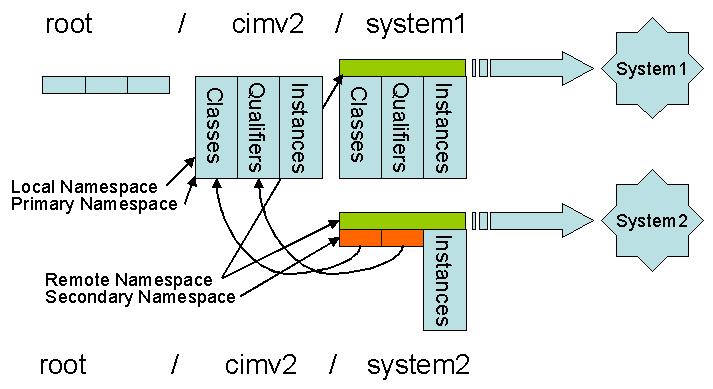

When large numbers of remote locations are managed, shared schema namespaces would be ideal, but they are not required. One can imagine the resulting "namespace topology" just by looking at names such as root/cimv2/system1, root/cimv2/system2, root/cimv2/system3, etc. Namespaces like these do not imply instance hierarchies. They are used to isolate names and the resources associated with those names.

The picture above shows three useful namespaces: root/cimv2, root/cimv2/system1 and root/cimv2/system2, the latter two being remote namespaces. Notice that root/cimv2/system2 is also a secondary schema namespace associated with root/cimv2. It could equally well have been associated with root/cimv2/system1 instead.

Approximately 95% of Remote CMPI is implemented as a generic CMPI provider (the proxy) and the remote daemon, 3% in the CMPI Provider Manager, and 2% in mainline Pegasus. In essence, what is needed from Pegasus is the ability to define remote namespaces using CIM_SystemIdentification and CIM_SystemInNamespace instances. The CMPI Provider Manager needs to determine whether a request is targeted at a remote namespace. If so, it will not load the provider. Instead it loads the remote provider proxy, with the provider name and network address as parameters. The Remote CMPI proxy and daemon already exist as part of the SBLIM project. Their Location Resolver needs to be adjusted for namespace-based resolution in a Pegasus environment.

Clients written to manage clustered elements can determine the topology by enumerating the CIM_Namespace and CIM_SystemIdentification data. Better still, SLP should be used to query the master CIMOM and its constituent elements, each with its designated namespace name.

Remotable provider support as implemented by Remote CMPI can be positioned as an alternative to, or even the solution for, "lightweight Pegasus" implementations.

Definition of the Problem

- Function is needed to support the definition of remote namespaces in PG_InterOp.

- Remote provider environments supporting clusters of clones will require updates to provider registration every time a clone is added. To simplify provider registration in such environments, there needs to be a way to specify generically the namespaces a provider can work for.

- Executing non-remotable providers in a namespace designated for remote operations will cause havoc. Support must be available to prevent the use of non-remotable providers in namespaces designated for remote operations.

Proposed Solution

As mentioned above, the bulk of Remote CMPI support is already available and in use. The areas needing modification have been identified and are listed here in more detail. Remote CMPI works with normal CMPI providers. The proxy mechanism is implemented as a generic CMPI provider. Registrations for remote CMPI providers all use the same Location, which points to the Remote CMPI proxy provider.

(r_kumpf) What happens if a non-remote CMPI provider registers a capability in a remote namespace?

(schuur) What is a non-remote CMPI provider? All CMPI providers are remotable.

Remote Namespace Designation

Support for designating remote namespaces is provided by two new control providers, one for CIM_SystemIdentification and one for CIM_SystemInNamespace. CIM_Namespace has to be changed to create persistent instances in PG_InterOp, which it currently does not do. During cimserver startup, instances of these classes will be queried to determine the namespace constellation. The internal namespace representation (class NameSpace in NameSpaceManager.cpp) will be updated to maintain and make available a remote-namespace indicator.

Generic Namespace Registration

Generic namespace registration support requires changes in ProviderRegistration support and in ProviderManagerService. Especially in clusters of identical clones, it will be tedious to update the Namespace specification in PG_ProviderCapabilities whenever new nodes are added to the cluster. For this reason, generic registration support should be offered using the following format:

(r_kumpf) This seems like a risky policy. Providers are automatically signed up for namespaces they know nothing about. If I add a new namespace, I may or may not be able to register my provider in that namespace, based simply on the namespace name I've chosen.

(schuur) Providers normally don't care about the namespace they execute in. Those that do care, and check for it, will terminate.

Namespaces = { root/cimv2, root/cimv2/cluster39/* };

Related services in Pegasus must be adjusted to support this type of specification.

(r_kumpf) A PG_ProviderCapabilities instance is specific to instrumentation for a single CIM class. Does this mechanism allow for registration of a provider for that one class in a whole set of namespaces?

(schuur) Yes. Instead of saying Namespaces=(a/1, a/2, a/3, a/4); you can say Namespaces=(a/*); (I used parentheses instead of curly brackets.)

(r_kumpf) Do you have specifics about what services are affected?

(schuur) Provider lookup services.

Remote Namespace Integrity Enforcement

Remote namespace integrity enforcement will be provided by ProviderManager2/ProviderManagerService.cpp. Pluggable Provider Managers will be required, at creation time, to indicate whether or not they support remote providers. The PegasusCreateProviderManager() signature will be extended with a boolean reference parameter that indicates remote namespace support. ProviderManagerService.cpp uses this indicator to determine whether this ProviderManager supports CIM operation requests for a namespace designated as remote. ProviderManagerService will make this data available to the ProviderRegistrationManager. Together with the NameSpaceManager support mentioned above, the ProviderRegistrationManager will reject registration requests for providers in remote namespaces when the corresponding ProviderManager has no remote support.

(r_kumpf) The proposed solution at tag 71 indicates that support for remote CMPI providers is implemented by a generic CMPI provider. What is special about the CMPI Provider Manager that enables this feature?

(schuur) Generic CMPI providers are defined in the CMPI specifications. Generic CMPI providers get additional data from the Provider Manager that is used to drive the remote site.

Schedule

The proposal is to have this implemented for 2.4.

Discussion

- How does the remote daemon get started? Stopped?

That depends on the remote system. Like most daemons, the daemon is normally started as part of the OS bring-up process, and it terminates when the OS shuts down. It is assumed that the OS will restart the daemon if it fails.

- What are the actual namespace topologies being proposed?

No topologies are enforced by this proposal. What counts for Remote CMPI is (the last qualifier of) the namespace name in the relevant CIMObjectPath. When the CMPIProviderManager detects that this name points to a designated remote namespace, it contacts the proxy provider, which calls the remote daemon. A simple namespace topology would be root/cimv2/systemA, root/cimv2/systemB, etc. One can organize remote systems by rooting them in an intermediate namespace, such as root/cimv2/cluster1/systemA, root/cimv2/cluster1/systemB, etc.

- It's great that the remote CMPI proxy and daemon are already in SBLIM, so you'd move them (copy them) to OpenPegasus CVS, yes?

Correct, using the usual Pegasus license.

- Is there any remote-CMPI-specific way of preventing multiple CIMOMs from using the same remote CMPI provider?

There is no support for preventing multiple CIMOMs from accessing a provider, and there might be reasons to allow this (failover scenarios). I guess you are thinking about restricting it. We might want to control this using additional configuration/policy support. The daemon is able to distinguish CIMOMs, especially when SSL support is provided, and this lets us control access to the daemon.

- Do you know the size of the remote CMPI runtime (at runtime)?

The code size is well below 100K (measured with size). Virtual/real memory (measured with ps aux) is 1500K/500K for the remote broker vs. 15100K/6700K for cimserver, both measured after startup.

- I'd like to see some text discussing the special security considerations when a provider is remote.

- What protocol is used to communicate between the provider manager and the agent on the remote system? Is it XML or something else? If XML, is the schema the same as the one used by CIM, or some subset?

- Is there any kind of authentication of the server connecting to the remote provider? What provisions are made for privacy and integrity of the communications?

- Is SSL used? Or maybe TLS? Or something else?

The current implementation uses a binary protocol over normal IP sockets. The data items are essentially CMPIData elements. An alternative could be to use the gSOAP package.

(r_kumpf) Since this point was not addressed, is it assumed that the CIM Server is not required to authenticate when connecting to a remote provider?

(schuur) No authentication takes place at the moment. The SSL layer will facilitate this.

The communication layer is 'pluggable' and can be replaced by any other protocol. Using an SSL-based communication layer will be rather trivial, but it is not committed at this point.

A ticketing scheme is used to make sure that asynchronous 'up-calls' (CIMOMHandle calls) can be authenticated.

- I don't see a configuration option to disallow remote support. In my case, I wouldn't want to deploy remote CMPI unless I had a secure communications layer between the CIM server and the remote agent. While it may be 'trivial' to do, creating it is not part of this proposal. Therefore, I'd like to see a configuration option to disable this feature.

A configuration option will be provided to disable both the use of the Remote CMPI proxy provider and the configuration of namespaces using CIM_SystemIdentification and CIM_SystemInNamespace.

Copyright (c) 2004 EMC Corporation; Hewlett-Packard Development Company, L.P.; IBM Corp.; The Open Group; VERITAS Software Corporation

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

THE ABOVE COPYRIGHT NOTICE AND THIS PERMISSION NOTICE SHALL BE INCLUDED IN ALL COPIES OR SUBSTANTIAL PORTIONS OF THE SOFTWARE. THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

Template last modified: January 20th 2004 by Martin Kirk

Template version: 1.6